the two films highlight AI's ability to provide insights into global ecosystems, emphasizing its role in analysing data to predict and mitigate risks.

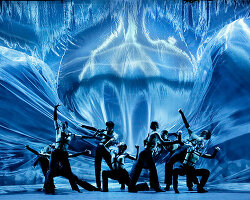

designboom is presenting the sound machines of love hultén at sónar festival in barcelona this june!

BMW releases the upgraded vision neue klasse X, featuring a series of new technologies and materials tailored specifically for the upcoming electric smart car.

following the unveiling at frieze LA 2024, designboom took a closer look at how the color-changing BMW i5 flow NOSTOKANA was created.