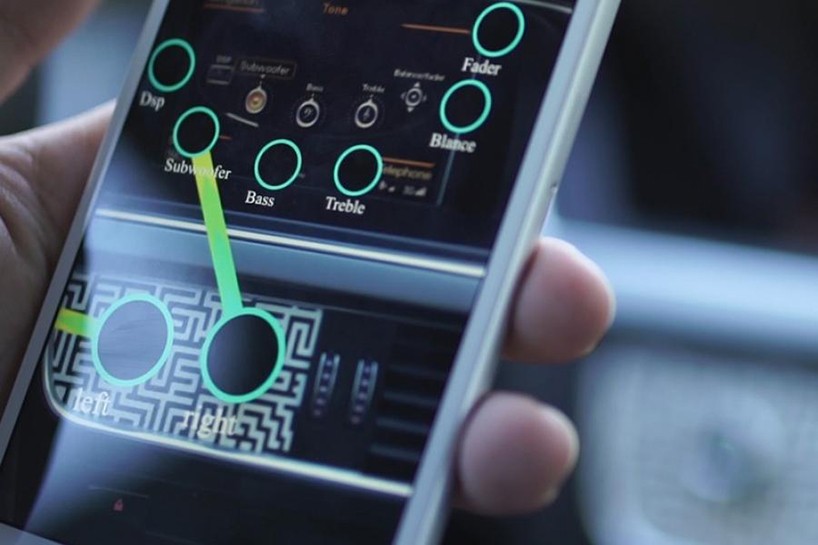

re-designed QR codes for recognizing objects

the interface draws lines for connecting actions

even chairs can be outfitted with the platform to offer added statistics