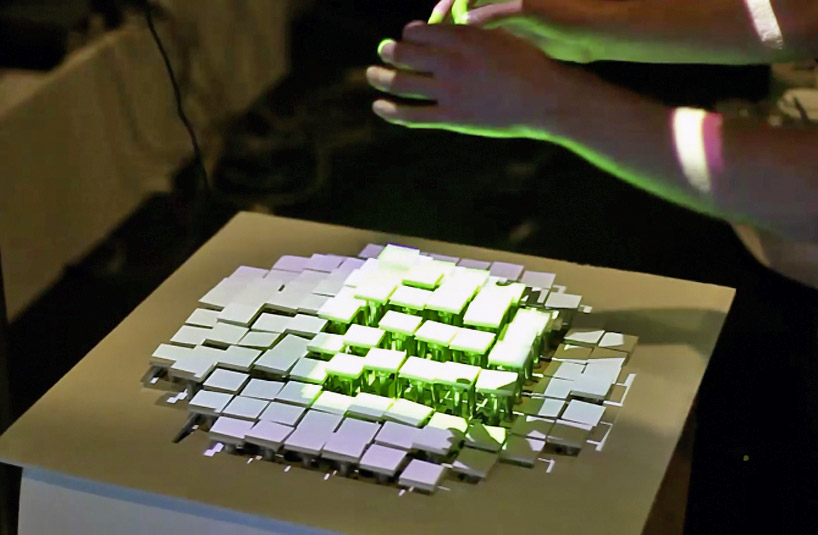

the green light cast from a mounted projector indicates the selected area, while the user’s hand gesture instructs the device to raise these keys

‘recompose’ responds to both tactile and gestural input

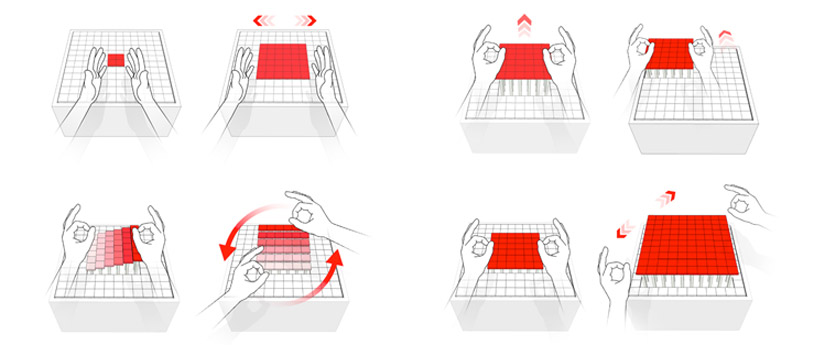

concept diagram of gestural interactions, clockwise from top left: selection (2 images), actuation, translation, scaling (both images), and rotation (both images).

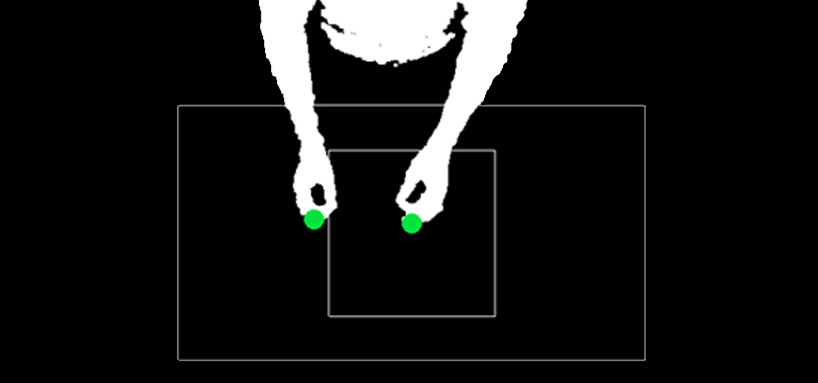

the view of input as modeled through computer vision